TL;DR: read this post if you want Llama 3 running locally on your ARM-based Mac (aka M1, M2, M3) with inference speeds of 30+ tokens per second and GPU cores at full throttle.

Less than 2 weeks ago, AI @ Meta released Llama 3. Since then, I have been frantically working on getting the smallest unquantized version (8B) running locally on my MacBook Pro with an M1 Pro chip. The model weights are stored in 16 bits (recall 8 bits = 1 byte), so each of the 8 billion parameters takes 2 bytes and the 8B model should fit into 16GB of memory. So if you have an ARM-based Mac (with its unified memory architecture), any configuration with strictly more than 16GB of unified memory should be able to comfortably run Llama 3 8B. If you have a Mac Studio with M2 Ultra and 192GB of unified memory, you can easily run the 70B version as well (about 140GB of memory will be used). Not a single consumer GPU from AMD or NVIDIA can do this. In fact, not even a single A100 can do this 🤯 You’d need two A100s, and the cost of even a single one is well over the $5,000 Apple charges for that beefed-up Mac Studio.
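The sizing argument above is just parameters × bytes per parameter. Here is a minimal sketch using the nominal 8B and 70B parameter counts (the real counts are slightly higher). It counts weights only. The KV cache, activations, and macOS itself need headroom on top, which is why you want strictly more than 16GB for the 8B model.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Weights only: activations, the KV cache, and the OS need extra headroom on top.

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Return the weight footprint in GB (1 GB = 1e9 bytes) for the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Nominal parameter counts; the exact figures are a little larger.
    for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
        print(f"{name}: {weight_memory_gb(params):.0f} GB in bfloat16")
```

The same function with `dtype="float32"` shows why 16-bit weights matter: the 8B model would need 32GB.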

Some background

All of this really started because I came across this 🔥 Medium post written by Mike Koypish. This guy decided to run a simple benchmark: Phi-2 on an ARM-based MacBook using the status quo PyTorch VERSUS (ding ding ding 🛎️) Apple’s recently released MLX “array framework”.

In case you haven’t heard of it before, MLX is like NumPy, but built specifically with Apple’s unified memory architecture in mind, as well as the data types the actual hardware supports (e.g., M1 doesn’t support bfloat16 natively, but newer chips do). Unlike NumPy, MLX also serves as the building blocks for neural networks, with modules such as nn and built-in optimizers. In short, MLX == PyTorch + NumPy.

Back to Mike. I encourage you to read his post and try the code yourself; he includes several notebooks to replicate the results. Despite having both computations on the GPU (in PyTorch, the Apple GPU backend is called “mps”), MLX is 3 times faster. By monitoring the hardware during computation, he finds that PyTorch cannot get the GPU cores on the MacBook to raise their clock speed past 554MHz, while MLX unlocks the hardware’s full potential by getting the GPU cores to run at almost 1300MHz ⚡️
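If you want to check a tokens-per-second figure like the one in the TL;DR on your own machine, all you need is a wall-clock timer around a token stream. Here is a minimal sketch. `measure_throughput` and `fake_stream` are hypothetical helpers, not part of MLX or PyTorch. `fake_stream` only simulates a model, so swap in whatever streaming generator your framework gives you.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_throughput(stream: Callable[[], Iterable[str]]) -> Tuple[int, float]:
    """Consume a token stream and return (token_count, tokens_per_second).

    `stream` is any zero-argument callable yielding one token per item
    (hypothetical interface: adapt it to your framework's streaming API).
    """
    start = time.perf_counter()
    count = sum(1 for _ in stream())
    elapsed = time.perf_counter() - start
    return count, (count / elapsed if elapsed > 0 else float("inf"))


def fake_stream(n: int = 50, delay: float = 0.001) -> Iterable[str]:
    # Stand-in for a real model: yields n dummy tokens, pausing `delay` seconds each.
    for i in range(n):
        time.sleep(delay)
        yield f"tok{i}"


if __name__ == "__main__":
    tokens, tps = measure_throughput(lambda: fake_stream())
    print(f"{tokens} tokens at {tps:.1f} tokens/s")
```

For a fair PyTorch vs. MLX comparison, do a warm-up pass first and use the same prompt and token count. The first call typically includes one-off costs such as loading weights and compiling GPU kernels.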

Given this obvious limitation of PyTorch, I said “I quit,” and decided I was going to get Llama 3 running on my MacBook Pro with MLX so that I can run my research experiments 3x faster 😎🪭

Setting up your environment

If you haven’t already, please set up conda or pyenv. I’m not going to argue about which is better or try to convince you which to use. I personally use miniconda and it makes my life easy.

We will create a new Python 3.11 environment (I pray for my Googlers) and install MLX plus the MLX-LM package, which comes with handy tools.

conda create -n mlx-3.11 python=3.11

# hit y and wait…

conda activate mlx-3.11

pip install mlx mlx-lm

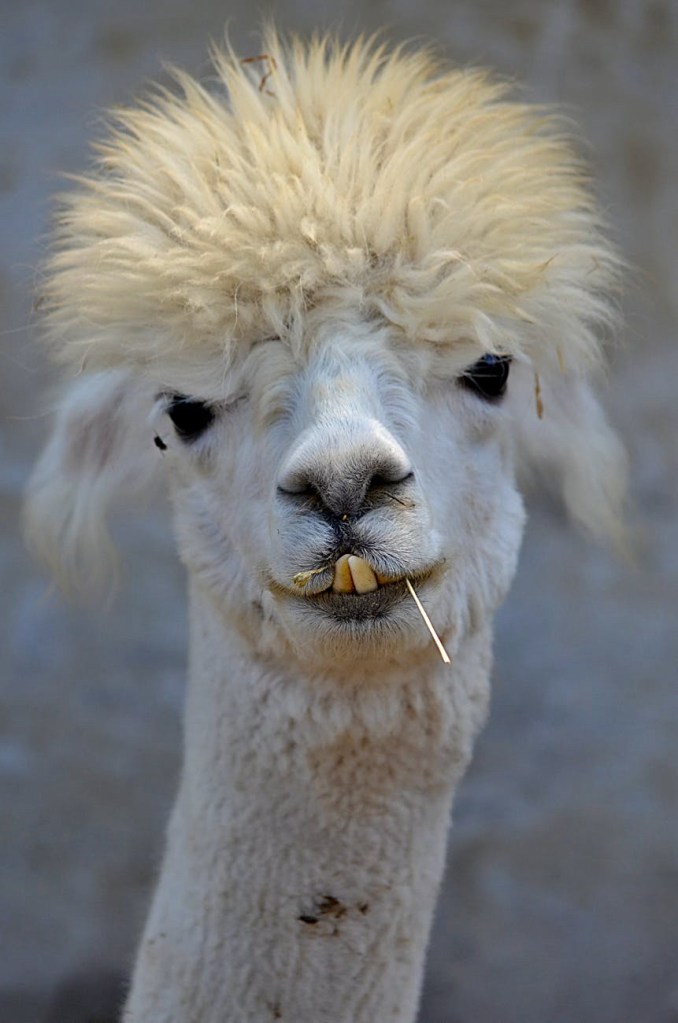
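If `pip install mlx` complains that it cannot find a matching distribution, a common culprit is a Python interpreter running under Rosetta instead of natively on arm64. MLX’s own install notes warn about this. Here is a minimal stdlib sketch to check which one you have. `is_native_apple_silicon` is a hypothetical helper name.

```python
import platform


def is_native_apple_silicon() -> bool:
    """True when this Python is a native arm64 build running on macOS.

    A Python running under Rosetta reports x86_64 here, which is not what MLX needs.
    """
    return platform.system() == "Darwin" and platform.machine() == "arm64"


if __name__ == "__main__":
    print("native arm64 macOS Python:", is_native_apple_silicon())
```

Run it inside the mlx-3.11 environment. On a native Apple silicon build it should print True.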

Great! Now play your favorite music. Since llamas are associated with Peru, I recommend “Peru” by Fireboy DML.

Getting the model

The standard thing to do is go to Meta, sign their waiver, get the email, and download the model. Even easier, just go to Hugging Face, click the checkbox, and download the model. Unfortunately, since everyone uses PyTorch, you won’t be able to load the model as is into MLX. Lucky for you, I did the hard work. I read up on the MLX codebase and found a handy script called convert. Lo and behold, you point it at the Hugging Face repo and it does the rest for you! So I pointed it at meta-llama/Meta-Llama-3-8B-Instruct on Hugging Face and specified the tensor type listed on the model page via the --dtype flag. Voilà: after the download and a few minutes of computation, you will see a folder called mlx_model with your converted model weights.

python -m mlx_lm.convert --hf-path meta-llama/Meta-Llama-3-8B-Instruct --dtype bfloat16
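Before moving on, it is worth confirming that the conversion actually produced something loadable. Here is a minimal sketch. It assumes the converter writes a `config.json` plus one or more `*.safetensors` weight files into `mlx_model`, with the tokenizer files sitting alongside them. `check_converted_model` is a hypothetical helper, not part of mlx-lm.

```python
from pathlib import Path


def check_converted_model(model_dir: str = "mlx_model") -> list[str]:
    """Return a list of problems found in a converted model folder (empty if it looks OK).

    Assumes the converter writes config.json plus one or more *.safetensors weight files.
    """
    path = Path(model_dir)
    if not path.is_dir():
        return [f"{path} does not exist"]
    problems = []
    if not (path / "config.json").is_file():
        problems.append("missing config.json")
    if not list(path.glob("*.safetensors")):
        problems.append("no .safetensors weight files")
    return problems


if __name__ == "__main__":
    issues = check_converted_model()
    print("looks good" if not issues else "\n".join(issues))
```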

There is also an option to upload the converted model back to Hugging Face. You need to join the mlx-community organization, but fortunately for those of you reading, I already did all of this. You can find the converted model uploaded by yours truly (KD7) at https://huggingface.co/mlx-community/Meta-Llama-3-8B-Instruct.

Now you can open Python and feel like you’re in PyTorch while actually using MLX.

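from mlx_lm import load

# Load the pre-converted weights straight from the Hugging Face Hub (downloaded on first use)
model, tokenizer = load("mlx-community/Meta-Llama-3-8B-Instruct")

# or load the mlx_model folder produced by mlx_lm.convert

model, tokenizer = load("<insert your path>/mlx_model")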
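Because this is the Instruct model, prompts should be wrapped in Llama 3’s chat template before generation. In practice you would let the tokenizer do this with something like `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` and pass the result to `mlx_lm.generate(model, tokenizer, prompt=prompt)`. The hypothetical helper `llama3_chat_prompt` below just spells out the string that template produces. It assumes the special-token format Meta published with Llama 3.

```python
def llama3_chat_prompt(messages: list[dict[str, str]]) -> str:
    """Render chat messages in the Llama 3 Instruct format (a sketch of the chat template).

    Each message is {"role": "system" | "user" | "assistant", "content": "..."}.
    The string ends with an open assistant header so the model writes the reply.
    """
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Generation prompt: the model continues from here and ends its turn with <|eot_id|>.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    print(llama3_chat_prompt([{"role": "user", "content": "Is the sky blue? Only answer yes or no"}]))
```

Hand-rolling the template is mainly useful for inspecting exactly what the model sees. For real use, prefer the tokenizer’s own template.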

There you have it: Llama 3 running on ARM-based Macs at ridiculous speeds.

Pause. I’m not done

As a researcher who blends systems, formal methods, and human interaction into AI, I always find myself needing to do better than just prompt engineering. I like to use llama.cpp because it supports constrained decoding via GBNF grammars. I don’t have a good blog post to link you to that explains the concept, as it’s an active research area, but basically it’s a way to impose some prior knowledge on the possible outputs of a large language model (LLM). Whether you use GBNF grammars, LMQL, or some other tool, they more or less boil down to putting a weighted bias on the tokens the LLM predicts.

For example, if I ask a simple question such as, “Is the sky blue? Only answer yes or no”, I don’t need any LLM to tell me, “I’m a helpful assistant. I looked at the sky for you and it is indeed blue. So, yes! The answer is not no.” Imagine trying to parse that nightmare of an answer. Instead, I can find the tokens for the words “yes” and “no” and add a bias (say +100) to their logits, so that when we are decoding, it is all but certain that we will see yes or no. Such tooling is so important that OpenAI supports it in its API endpoints (the logit_bias parameter). Anthropic and Google do not, so I rarely use them.
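To make the +100 idea concrete, here is a toy sketch in plain Python. It uses a made-up five-token vocabulary with made-up logits, where ids 3 and 4 stand in for “yes” and “no” (hypothetical ids, not Llama 3’s). A real decoder does the same addition over the full vocabulary before sampling.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def apply_logit_bias(logits: list[float], bias: dict[int, float]) -> list[float]:
    """Return a copy of the logits with a per-token bias added (OpenAI-style logit_bias)."""
    biased = list(logits)
    for token_id, b in bias.items():
        biased[token_id] += b
    return biased


if __name__ == "__main__":
    # Toy vocabulary; ids 3 and 4 stand in for "yes" and "no" (hypothetical ids).
    vocab = ["I'm", "a", "helpful", "yes", "no"]
    logits = [4.0, 3.5, 3.0, 1.0, 0.5]  # made-up scores: the chatty tokens win
    probs = softmax(apply_logit_bias(logits, {3: 100.0, 4: 100.0}))
    for word, p in zip(vocab, probs):
        print(f"{word:>8}: {p:.4f}")
```

Without the bias, “I’m” is the most likely next token. With it, the three chatty tokens drop to probabilities on the order of 1e-43, “yes” and “no” split the mass roughly 0.62 / 0.38, and greedy decoding picks “yes”.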

MLX-LM also ships a server that exposes a very basic OpenAI-style endpoint, so we can replace any OpenAI calls with calls to the local endpoint it creates for us. However, that server needed some love. Long story short, I made a series of PRs to implement logit biases in the codebase, both for direct generate calls and for the server. While I was in there, I also fixed some styling and added validation so that the server stopped throwing 5xx errors and actually returned something useful. Here is the code that will allow you to replace OpenAI with your new MLX-based server.

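from openai import OpenAI

# Point the official OpenAI client at the local MLX-LM server; the client insists on an API key, so any placeholder string works here
client = OpenAI(base_url="http://localhost:8080/v1", api_key="go-follow-karims-blog")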
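Under the hood, the client just POSTs JSON to /v1/chat/completions. To see what a logit-biased request looks like without the openai package, here is a minimal stdlib sketch. It assumes the server was started with something like `python -m mlx_lm.server --model mlx-community/Meta-Llama-3-8B-Instruct --port 8080`, and that it accepts OpenAI’s `logit_bias` field as described above. The token ids are hypothetical placeholders. Look up the real ones with your tokenizer, e.g. `tokenizer.encode("yes", add_special_tokens=False)`, and remember that “yes” and “ yes” are different tokens. With the openai client, the equivalent is `client.chat.completions.create(model=..., messages=..., max_tokens=1, logit_bias={...})`.

```python
import json
import urllib.error
import urllib.request


def build_chat_request(
    question: str,
    logit_bias: dict[int, float],
    base_url: str = "http://localhost:8080/v1",
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request with a logit bias."""
    payload = {
        # Assumption: should match the --model the server was started with.
        "model": "mlx-community/Meta-Llama-3-8B-Instruct",
        "messages": [{"role": "user", "content": question}],
        "max_tokens": 1,  # we only want a single yes/no token back
        # JSON object keys are strings, so token ids are sent as strings (OpenAI's format).
        "logit_bias": {str(tok): bias for tok, bias in logit_bias.items()},
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    YES_ID, NO_ID = 12345, 67890  # hypothetical placeholders, not real Llama 3 token ids
    req = build_chat_request("Is the sky blue? Only answer yes or no", {YES_ID: 100, NO_ID: 100})
    try:
        with urllib.request.urlopen(req, timeout=60) as resp:
            print(json.load(resp)["choices"][0]["message"]["content"])
    except urllib.error.URLError as err:
        print(f"Could not reach the local server: {err}")
```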

And that is all you need to replace your GPT calls with your own Llama 3 8B (or really any LLM that fits in memory) running locally on your Mac at supercalifragilisticexpialidocious speeds!
